Developer Guide

Twitter API Toolkit for Google Cloud: Enterprise API

By Prasanna Selvaraj

Detecting trends from Twitter can be complex work. Twitter APIs offer several options for receiving and processing Tweets on the fly. In order to categorize trends, Tweet themes and topics must be identified—another potentially complex endeavor as it involves integrating with NER (Named Entity Recognition) / NLP (Natural Language Processing) services.

The Twitter API Toolkit for Google Cloud: Enterprise API solves these challenges by giving developers a trend detection framework that can be installed on Google Cloud in 60 minutes or less.

Why use the Twitter API toolkit for Google Cloud: Enterprise API?

The framework can be used to detect macro- and micro-level trends across domains and industry verticals

Designed to scale horizontally and process high volumes of Tweets, on the order of millions of Tweets per day

Automates the data pipeline process to ingest Tweets into Google Cloud

Visualization of trends in an easy-to-use dashboard

How much time will this take? 60 mins is all you need

In 60 minutes or less, you’ll learn the basics of the Twitter API and Tweet annotations, and you’ll gain experience with Google Cloud, analytics, and the foundations of data science.

Which cloud services does this toolkit use, and what do they cost?

- This toolkit requires a Twitter Enterprise API account (pricing information is provided by your account manager); sign up here

- This toolkit uses Google BigQuery, App Engine, PubSub, Cloud Scheduler, and DataStudio. For pricing information, refer to the Google Cloud pricing page

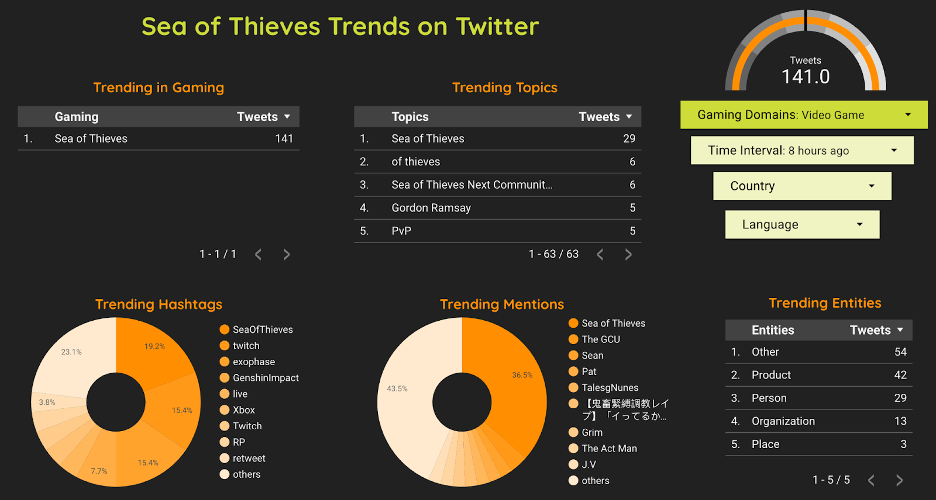

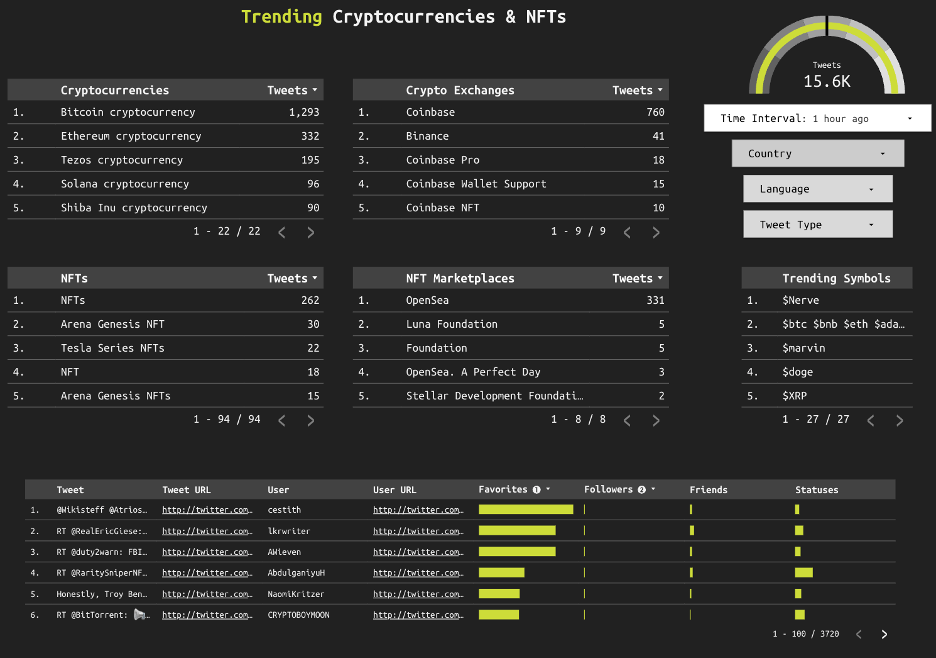

What kind of dashboard can you build with the toolkit?

- Here are a few real-time dashboard illustrations built with the Filtered Stream toolkit.

Fig 1 depicts a real-time ‘Gaming’ dashboard that shows conversations about video games on Twitter. You can get insights into trending topics in gaming, popular hashtags, and the underlying Tweets as they are streamed in real time.

Similarly, Fig 2 depicts a real-time analysis of the ‘Cryptocurrencies and Digital Assets’ topic.

This toolkit helps you to build a real-time trend detection dashboard

Monitor real-time trends for configured rules as they unfold on the platform

Give me the big picture

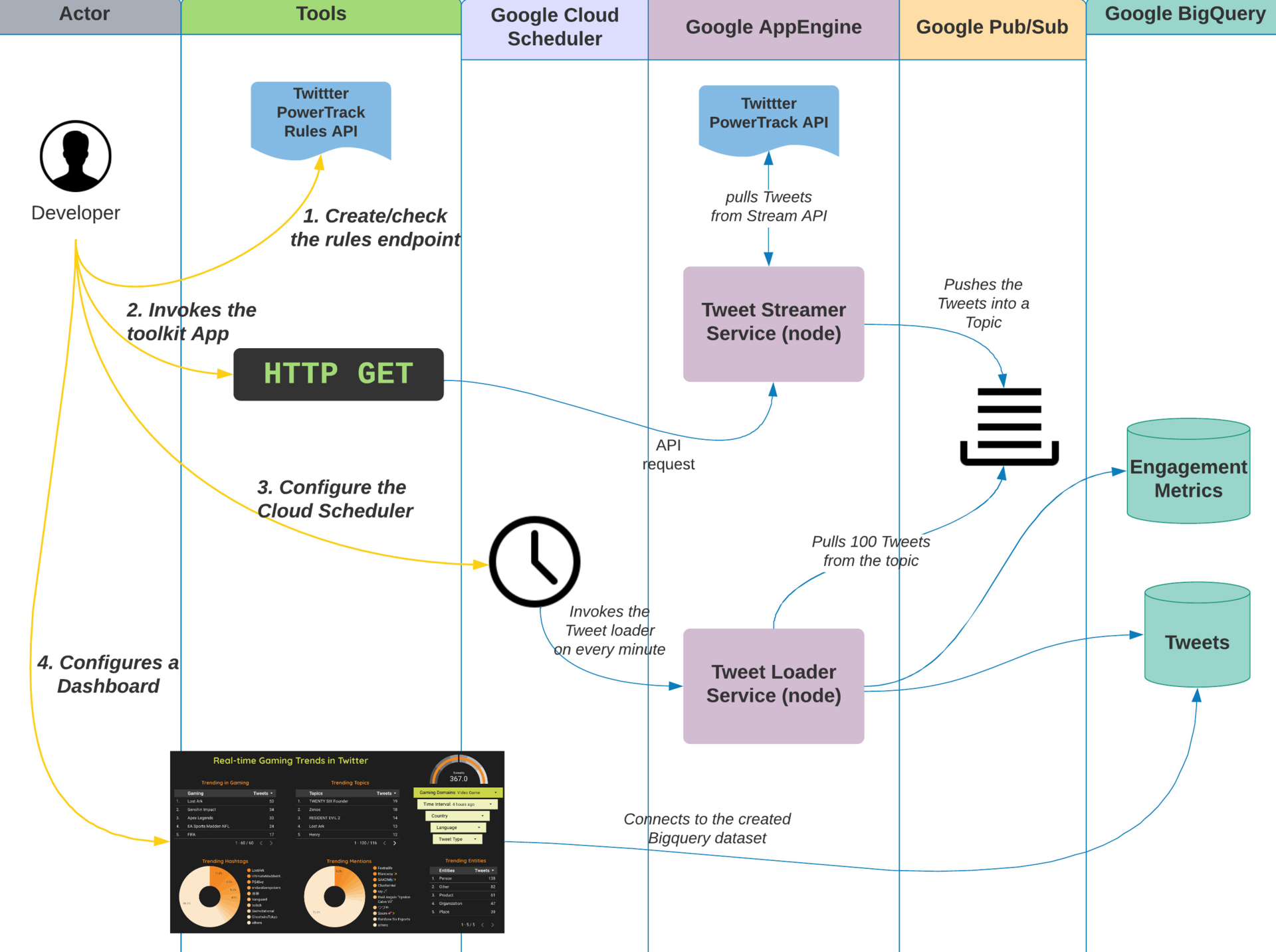

This toolkit comprises five core components: a Tweet streamer service that listens to real-time Tweets and pushes them to a topic/queue; the topic/queue itself; a CRON job that triggers the Tweet loader service; the Tweet loader service, which pulls the Tweets from the topic/queue and stores them in a database; and a dashboard that visualizes the Tweets by querying the database with SQL.

Tweet streamer service (nodeJs component)

The Tweet streamer service listens to the real-time Twitter PowerTrack API and temporarily pushes the Tweets to a topic in Google PubSub. The PowerTrack rules that determine which Tweets are delivered are managed with the PowerTrack Rules API.
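Because network chunks rarely line up with Tweet boundaries, a streamer has to buffer the raw text and split it into complete Tweets before publishing them. Below is a minimal sketch, assuming each Tweet arrives as one line of JSON terminated by `\r\n` with periodic blank keep-alive lines, and that chunks are strings (for example after `response.setEncoding("utf8")`, which also keeps multi-byte characters intact across chunks). `createTweetSplitter` is a hypothetical helper, not part of the toolkit, and publishing to PubSub is left to the `onTweet` callback.

```javascript
// Hypothetical helper: turns stream chunks (strings) into parsed Tweet objects.
// Assumes one JSON Tweet per "\r\n"-terminated line, plus blank keep-alive lines.
function createTweetSplitter(onTweet) {
  let buffer = "";
  return function handleChunk(chunk) {
    buffer += chunk;
    const lines = buffer.split("\n");
    // The last element is an incomplete line (or ""), so keep it for the next chunk.
    buffer = lines.pop();
    for (const rawLine of lines) {
      const line = rawLine.trim(); // drops the trailing "\r"
      if (line === "") continue; // keep-alive signal: nothing to publish
      onTweet(JSON.parse(line));
    }
  };
}

// Usage: feed chunks as they arrive, e.g. from an HTTP response's "data" event.
const received = [];
const handleChunk = createTweetSplitter((tweet) => received.push(tweet));
handleChunk('{"id_str":"1","text":"hel');
handleChunk('lo"}\r\n\r\n{"id_str":"2","text":"world"}\r\n');
console.log(received.map((t) => t.id_str)); // [ '1', '2' ]
```

The first Tweet is split across two chunks and still comes out whole, while the blank keep-alive line in between is ignored.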

Stream topic based on Google PubSub

The Google PubSub stream topic acts as a shock absorber for this architecture. When Tweet volume surges suddenly, PubSub absorbs the increase: the topic temporarily stores the Tweets so that the Tweet loader service can pull them in batches and store them in the database. This also shields the database from a flood of individual insert calls.
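To make the shock-absorber idea concrete, here is a toy, in-memory stand-in for the topic: a burst of Tweets is published at once, and the consumer drains it in batches no larger than a fixed size. `BufferedTopic` is purely illustrative and is not how the toolkit talks to PubSub; a real PubSub subscription also persists messages and redelivers any that are not acknowledged.

```javascript
// Illustrative in-memory stand-in for a PubSub topic and subscription.
// It only demonstrates buffering; unlike PubSub it is neither durable nor distributed.
class BufferedTopic {
  constructor() {
    this.messages = [];
  }

  // The producer (Tweet streamer) publishes as fast as Tweets arrive.
  publish(message) {
    this.messages.push(message);
  }

  // The consumer (Tweet loader) removes at most maxMessages per pull, so the
  // database sees bounded batches however large the burst was.
  pull(maxMessages) {
    return this.messages.splice(0, maxMessages);
  }
}

const topic = new BufferedTopic();
for (let i = 0; i < 1200; i++) topic.publish({ id: i }); // sudden surge of 1,200 Tweets

const batchSizes = [];
let batch;
while ((batch = topic.pull(500)).length > 0) batchSizes.push(batch.length);
console.log(batchSizes); // [ 500, 500, 200 ]
```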

CRON job based on Google Cloud Scheduler

A CRON job in Google Cloud Scheduler acts as a poller that triggers the Tweet loader service at regular intervals.

Tweet loader service (nodeJs component)

The Tweet loader service, triggered by the CRON job, pulls Tweets in batches (for example, 500 Tweets per pull, configurable in the config.js file) and stores them in a BigQuery database.
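The loader's batch step can be sketched as pull, insert, then acknowledge. Acknowledging only after the BigQuery insert succeeds means a failed write leaves the messages on the subscription for a later run. `loadBatch` and its three callbacks (`pullBatch`, `insertRows`, `ackMessages`) are hypothetical stand-ins for the PubSub and BigQuery client calls, and each message is assumed to carry one Tweet as JSON in `data`; the toolkit's actual code may be organized differently.

```javascript
// Hypothetical loader step: pull up to messageCount messages, store them, then ack.
// The three callbacks stand in for PubSub/BigQuery client calls (assumptions).
async function loadBatch({ pullBatch, insertRows, ackMessages }, messageCount) {
  const messages = await pullBatch(messageCount);
  if (messages.length === 0) return 0;

  // Each message is assumed to carry one Tweet as a JSON string in `data`.
  const rows = messages.map((m) => JSON.parse(m.data));
  await insertRows(rows); // if this throws, nothing below runs and nothing is acked

  // Ack only after a successful insert; unacked messages are redelivered later.
  await ackMessages(messages.map((m) => m.ackId));
  return rows.length;
}

// Usage with in-memory fakes in place of the real clients.
const pending = [
  { ackId: "a1", data: '{"id":"1"}' },
  { ackId: "a2", data: '{"id":"2"}' },
];
const stored = [];
const acked = [];
loadBatch(
  {
    pullBatch: async (n) => pending.slice(0, n),
    insertRows: async (rows) => { stored.push(...rows); },
    ackMessages: async (ids) => { acked.push(...ids); },
  },
  500
).then((count) => console.log(count, acked)); // 2 [ 'a1', 'a2' ]
```

This ordering gives at-least-once delivery: if the process fails between the insert and the acknowledgement, the same Tweets can be inserted twice, so downstream queries may need to tolerate duplicates.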

Google DataStudio as a Dashboard for analytics

Google DataStudio hosts the trend detection dashboard. It connects to BigQuery through a SQL query that takes a time interval, in minutes, as a parameter (for example, 30 or 60), and the query returns only Tweets created within that interval. The interval can range from minutes to hours; for example, passing 60 shows the trends of the last 60 minutes.
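The interval filter keeps Tweets whose `created_at` is later than the current time minus the interval. The same comparison in JavaScript is handy for reasoning about which Tweets a given interval returns. `tweetsInWindow` is a hypothetical helper, the sample rows are illustrative, and timestamps are assumed to be UTC.

```javascript
// Mirrors: created_at > DATETIME_SUB(CURRENT_DATETIME(), INTERVAL @time_interval MINUTE)
function tweetsInWindow(tweets, timeIntervalMinutes, now = new Date()) {
  const cutoff = now.getTime() - timeIntervalMinutes * 60 * 1000;
  return tweets.filter((t) => new Date(t.created_at).getTime() > cutoff);
}

const now = new Date("2022-06-01T12:00:00Z");
const sample = [
  { id: "a", created_at: "2022-06-01T11:45:00Z" }, // 15 minutes ago
  { id: "b", created_at: "2022-06-01T11:10:00Z" }, // 50 minutes ago
  { id: "c", created_at: "2022-06-01T10:30:00Z" }, // 90 minutes ago
];
console.log(tweetsInWindow(sample, 30, now).map((t) => t.id)); // [ 'a' ]
console.log(tweetsInWindow(sample, 60, now).map((t) => t.id)); // [ 'a', 'b' ]
```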

As a user of this toolkit, you need to perform four steps:

Add rules to the stream with the PowerTrack rules API endpoint

Install and invoke the toolkit from GitHub in your Google Cloud project

Configure the CRON job - Google Cloud Scheduler

Configure the dashboard, by connecting to the BigQuery database with DataStudio

Prerequisites: As a developer, what do I need to run this toolkit?

Twitter Enterprise API access (pricing information is provided by your account manager). Sign up here.

- [Optional] A Twitter API bearer token for accessing the Engagement API. Refer to this doc.

A Google Cloud Account. Sign up here

How should I use this toolkit? - Tutorial

Step One: Add rules to the stream

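1. Add rules to the stream by calling the PowerTrack Rules API endpoint. Replace <<GNIP_USERNAME>> with your GNIP console username (curl prompts for the password). Note that the double quotes inside the rule value must be escaped in the JSON body:

curl -v -X POST -u <<GNIP_USERNAME>> "https://data-api.x.com/rules/powertrack/accounts/companyname/publishers/twitter/prod.json" -d '{"rules":[{"value":"(#bitcoin OR bitcoin OR \"Doge coin\" OR from:noaa) lang:en","tag":"Crypto"}]}'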

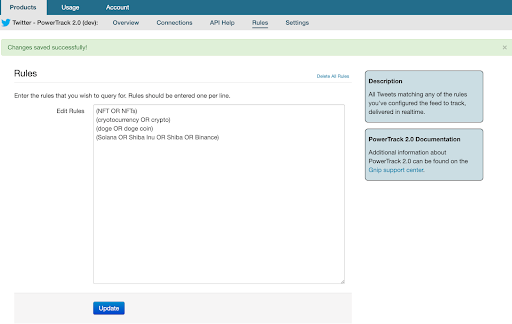

2. Alternatively, if you have access to the GNIP console, you can add rules there: navigate to the GNIP console, select the PowerTrack stream you want this application to listen to, and click “Rules”.

3. In the “Edit Rules” dialog, enter the rules and click “Update”. The rules must comply with the PowerTrack rules language.

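4. Below is an example PowerTrack rule for monitoring keywords related to cryptocurrencies and NFTs. Groups separated by a space are ANDed, so a Tweet must match at least one term from every group, and multi-word phrases must be enclosed in double quotes.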

(NFT OR NFTs) (cryptocurrency OR crypto) (doge OR "doge coin") (Solana OR "Shiba Inu" OR Shiba OR Binance)
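Rules like these are easy to get subtly wrong by hand: terms inside parentheses are ORed, groups separated by a space are ANDed, multi-word phrases need double quotes, and those quotes must in turn be escaped inside the JSON body sent to the rules endpoint. Below is a sketch of two hypothetical helpers (not part of the toolkit) that build a rule from groups of terms and then build the JSON body with `JSON.stringify`, which handles the escaping. The tag "NFT" is only an example, and the printed body can be passed to curl's `-d` option.

```javascript
// Hypothetical helpers for composing PowerTrack rules; not part of the toolkit.

// Each inner array is an OR group; groups are joined by a space (AND).
// Multi-word terms are wrapped in double quotes so they match as exact phrases.
function buildRule(groups) {
  const quote = (term) => (/\s/.test(term) ? `"${term}"` : term);
  return groups.map((terms) => `(${terms.map(quote).join(" OR ")})`).join(" ");
}

// JSON.stringify escapes the double quotes inside each rule value.
function buildRulesBody(rules) {
  return JSON.stringify({ rules: rules.map(({ value, tag }) => ({ value, tag })) });
}

const rule = buildRule([
  ["NFT", "NFTs"],
  ["cryptocurrency", "crypto"],
  ["doge", "doge coin"],
  ["Solana", "Shiba Inu", "Shiba", "Binance"],
]);
console.log(rule);
// (NFT OR NFTs) (cryptocurrency OR crypto) (doge OR "doge coin") (Solana OR "Shiba Inu" OR Shiba OR Binance)

console.log(buildRulesBody([{ value: rule, tag: "NFT" }]));
// {"rules":[{"value":"(NFT OR NFTs) (cryptocurrency OR crypto) (doge OR \"doge coin\") (Solana OR \"Shiba Inu\" OR Shiba OR Binance)","tag":"NFT"}]}
```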

Step Two: Install and configure the toolkit (Tweet Streamer and Loader service)

1. Open the Google Cloud console and launch Cloud Shell. Make sure you are working in the correct Google Cloud project.

2. Set the Google Cloud project ID and enable the BigQuery API by running the following commands:

gcloud config set project <<PROJECT_ID>>

gcloud services enable bigquery.googleapis.com

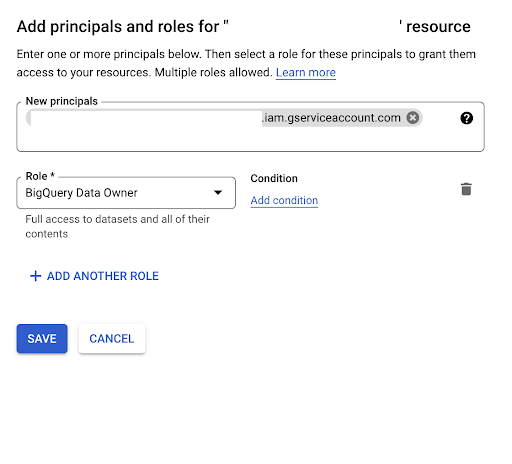

4. Ensure you have the BigQuery Data Owner role. In the Google Cloud console, choose IAM from the main menu and add a new IAM permission with these values:

Principal: Your Google account email address

Role: BigQuery Data Owner

5. From the Cloud Shell terminal, download the toolkit code by running:

git clone https://github.com/twitterdev/gcloud-toolkit-power-track.git

6. Navigate to the source code folder

cd gcloud-toolkit-power-track

7. Edit the configuration file with your favorite editor, such as vi or emacs.

After making the changes below, save the file and quit the editor.

vi config.js

Edit config.power_track_api object in config.js

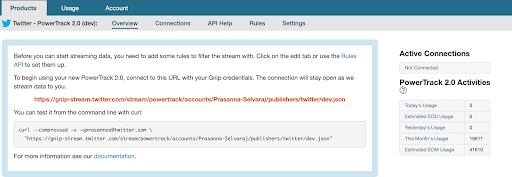

a. Update “pt_stream_path” with the “Stream Path” shown under the “Overview” section of the GNIP console. Copy the stream URL starting from “/stream”.

b. Update the GNIP username and password. These are the credentials required to log into the GNIP console

c. Update the Twitter API bearer token. The value must start with the word ‘Bearer’, followed by a space and then the token.

Edit config.gcp_infra object in config.js

d. Update the Google project ID

e. Update “topicName” and “subscriptionName” with any unique strings. We recommend names such as “trends-topic” for the topic and “trends-sub” for the subscription.

f. The “messageCount” attribute is the maximum number of messages the Tweet loader service pulls from the topic in a single pull.

g. The “streamReconnectCounter” attribute is the number of empty message pulls from the topic that the Tweet loader service tolerates before reconnecting to the stream. We recommend leaving “messageCount” at 500 (the maximum) and “streamReconnectCounter” at 3, although both may need calibration for the topic you are listening to.

Edit config.gcp_infra.bq object in config.js

h. Update the “dataSetId” attribute with a unique string. We recommend “TrendsDS”.

Edit config.gcp_infra.bq.table object in config.js

i. Update the “tweets” and “engagement” attributes with unique table names. We recommend “TweetTrends” and “Engagements”.
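Putting steps d to i together, the edited gcp_infra section of config.js might look like the sketch below. The nesting and the `topicName`, `subscriptionName`, `messageCount`, `streamReconnectCounter`, `dataSetId`, `tweets`, and `engagement` names come from the steps above; `projectId` is an assumed key name, so match it to the key already present in your copy of config.js. `findUnsetValues` is a hypothetical check that lists any `<<...>>` placeholders left unedited.

```javascript
// Sketch of the gcp_infra section of config.js after steps d to i.
// "projectId" is an assumed key name; the other keys come from the steps above.
const config = {
  gcp_infra: {
    projectId: "<<PROJECT_ID>>",
    topicName: "trends-topic",
    subscriptionName: "trends-sub",
    messageCount: 500,
    streamReconnectCounter: 3,
    bq: {
      dataSetId: "TrendsDS",
      table: {
        tweets: "TweetTrends",
        engagement: "Engagements",
      },
    },
  },
};

// Hypothetical sanity check: returns the paths of values still set to a "<<...>>" placeholder.
function findUnsetValues(obj, path = "config") {
  const unset = [];
  for (const [key, value] of Object.entries(obj)) {
    const keyPath = `${path}.${key}`;
    if (value !== null && typeof value === "object") {
      unset.push(...findUnsetValues(value, keyPath));
    } else if (String(value).startsWith("<<")) {
      unset.push(keyPath);
    }
  }
  return unset;
}

console.log(findUnsetValues(config)); // [ 'config.gcp_infra.projectId' ]
```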


8. Back in Cloud Shell, deploy the code to App Engine by running the command below. The deployment can take a few minutes.

gcloud app deploy

Authorize the command.

If prompted:

Choose a deployment region, for example 18 for us-east1

Accept the default configuration with Y

9. After the deployment, get the URL endpoint for the deployed application with the command:

gcloud app browse -s default

The above command will output an app endpoint URL similar to this one:

https://trend-detection-dot.uc.r.appspot.com/

10. Take the endpoint URL from the output of step 9 and make a curl or browser request with “/stream” appended to the path. This starts the toolkit, which begins listening for Tweets that match the rules you added in Step One.

curl https://<<APP_ENDPOINT_URL>>/stream

11. Tail the logs of the deployed application:

gcloud app logs tail -s default

12. If the stream disconnects, reconnect by running the same command again:

curl https://<<APP_ENDPOINT_URL>>/stream

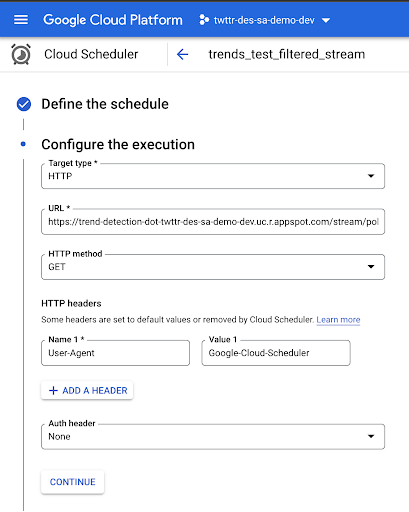
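Step 12 reconnects the stream by hand. The “streamReconnectCounter” setting from step 7 describes a related safeguard in the loader: after a configured number of empty pulls from the topic, it reconnects. Below is a minimal sketch of such a counter, assuming it counts consecutive empty pulls and resets whenever Tweets arrive. `createEmptyPullTracker` is hypothetical, and the toolkit's own logic may differ.

```javascript
// Hypothetical tracker for consecutive empty pulls from the subscription.
function createEmptyPullTracker(streamReconnectCounter) {
  let emptyPulls = 0;
  // Returns true when the caller should reconnect the stream.
  return function recordPull(messagesPulled) {
    if (messagesPulled > 0) {
      emptyPulls = 0; // any Tweets mean the stream is alive
      return false;
    }
    emptyPulls += 1;
    if (emptyPulls >= streamReconnectCounter) {
      emptyPulls = 0; // start counting again after reconnecting
      return true;
    }
    return false;
  };
}

const recordPull = createEmptyPullTracker(3);
console.log([500, 0, 0, 0, 12, 0].map((n) => recordPull(n)).join(" "));
// false false false true false false
```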

Step Three: Configure the CRON job - Google Cloud Scheduler

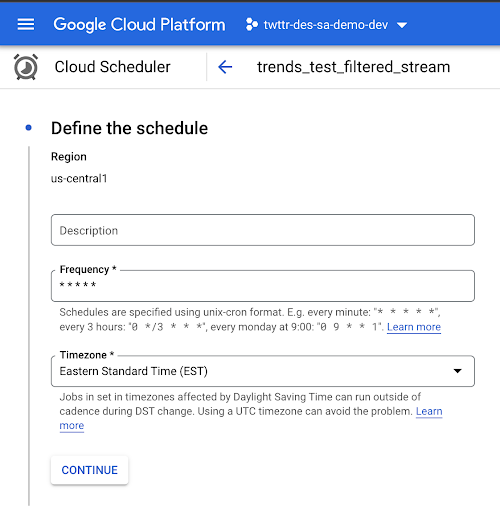

1. In the Google Cloud console, open Cloud Scheduler from the main menu.

2. Create a new Cloud Scheduler job and set its frequency to the expression below, with a single space between each asterisk. This schedule triggers the job every minute:

* * * * *

3. Configure the execution with “Target Type” as “HTTP” and insert your application endpoint URL as below:

https://<<YOUR_APP_ENDPOINT_URL>>/stream/poll/2/30000

The request “/stream/poll” points to the “Tweet loader” service. The parameters 2 and 30000 are the number of times the Tweet loader service is invoked per trigger and the delay between invocations in milliseconds. For example, “2/30000” invokes the “Tweet loader” service twice within a minute, 30,000 milliseconds (30 seconds) apart. If you anticipate more Tweets for a topic, increase the number of invocations and decrease the delay to consume Tweets faster. These values can be fine-tuned by monitoring a specific topic such as “Crypto” or “Doge coin”.

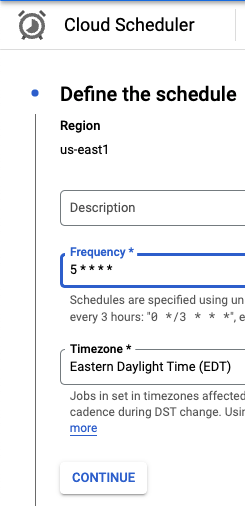
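The timing described for “/stream/poll/2/30000” can be sketched as a list of start offsets within each one-minute trigger. `pollOffsets` and `fitsInMinute` are hypothetical helpers that only do the arithmetic. They assume the first run starts immediately and each later run starts `delayMs` after the previous one, which matches the “2 times within a minute, 30 seconds apart” example.

```javascript
// Hypothetical helper: start offsets (ms) of the Tweet loader runs within one trigger,
// assuming the first run starts immediately and each later run waits delayMs.
function pollOffsets(count, delayMs) {
  return Array.from({ length: count }, (_, i) => i * delayMs);
}

// Rule of thumb (an assumption, not a toolkit check): count * delayMs should not
// exceed the 60,000 ms between "* * * * *" triggers, or runs from consecutive
// triggers start to overlap.
function fitsInMinute(count, delayMs) {
  return count * delayMs <= 60000;
}

console.log(pollOffsets(2, 30000)); // [ 0, 30000 ]
console.log(pollOffsets(4, 15000)); // [ 0, 15000, 30000, 45000 ]
console.log(fitsInMinute(4, 15000), fitsInMinute(3, 30000)); // true false
```

Doubling the count and halving the delay (4/15000) keeps the runs inside the same minute while consuming the topic twice as often.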

[Optional] Step four: Configure the CRON job for Tweet engagements - Google Cloud Scheduler

1. In the Google Cloud console, open Cloud Scheduler from the main menu.

2. Create a new Cloud Scheduler job and set its frequency to the expression below, with a single space between each field, like “5 * * * *”:

5 * * * *

This schedule triggers the job once an hour, at minute 05.

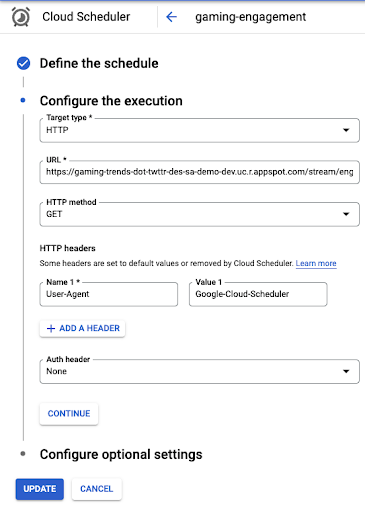

3. Configure the execution with “Target Type” as “HTTP” and insert your application endpoint URL as below:

https://<<YOUR_APP_ENDPOINT_URL>>/stream/engagement

Set the HTTP method to GET and add an HTTP header of name User-Agent and value Google-Cloud-Scheduler.

The “/stream/engagement” request calls the Engagement API /totals endpoint and saves the engagement metrics in the Engagement table defined in config.js. By joining the Engagement table with the Tweet table, you can find the most “liked” Tweet or the Tweet with the most video views.
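Both Cloud Scheduler schedules in Steps Three and Four use the five standard cron fields: minute, hour, day of month, month, and day of week. A deliberately minimal matcher that supports only `*` and single numbers shows why `* * * * *` fires every minute, why `5 * * * *` fires once an hour at minute 05, and why the fields must be separated by spaces. `cronMatches` is hypothetical and evaluates times in UTC; Cloud Scheduler itself accepts the full unix-cron syntax (ranges, lists, steps) and a configurable time zone.

```javascript
// Hypothetical, deliberately minimal cron matcher: supports "*" and plain numbers only.
function cronMatches(expression, date) {
  const fields = expression.trim().split(/\s+/);
  if (fields.length !== 5) throw new Error("expected 5 space-separated fields");
  const actual = [
    date.getUTCMinutes(),
    date.getUTCHours(),
    date.getUTCDate(),
    date.getUTCMonth() + 1, // cron months are 1-12
    date.getUTCDay(), // 0 = Sunday
  ];
  return fields.every((field, i) => field === "*" || Number(field) === actual[i]);
}

const at0905 = new Date("2022-06-01T09:05:00Z");
const at0906 = new Date("2022-06-01T09:06:00Z");
console.log(cronMatches("* * * * *", at0905), cronMatches("* * * * *", at0906)); // true true
console.log(cronMatches("5 * * * *", at0905), cronMatches("5 * * * *", at0906)); // true false
// cronMatches("*****", at0905) throws: without spaces it is one field, not five.
```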

Step five: Configure the Trends dashboard with Google DataStudio

SQL query to be used for Trend detection in DataStudio

Replace <<datasetID.table>> in the SQL below with your dataset ID and Tweet table name. Both are set in config.js (the “dataSetId” and “tweets” attributes, for example TrendsDS.TweetTrends).

SELECT
  context.context_entity_name AS CTX_ENTITY, context.context_domain_name AS CTX_DOMAIN_NAME, context.context_domain_id AS CTX_ID, entity.normalized_text AS NER_TEXT,
  created_at, entity.type AS NER_TYPE, GT.text AS TWT_TEXT, GT.tweet_url AS TWT_URL, GT.user.screen_name AS USR_SCR_NM, GT.user.user_url AS USR_URL,
  GT.user.followers_count AS FOLLOWERS_CT, GT.user.friends_count AS FRNDS_CT, GT.user.favourites_count AS FAV_CT, GT.user.statuses_count AS STS_CT, U_MEN.name AS USR_MNTS, HASH_T.text AS HASH_TAGS, GT.lang AS LANG, LOC.country AS CNTRY, GT.tweet_type AS TWT_TYP,
  COUNT(*) AS MENTIONS
FROM
  `<<datasetID.table>>` AS GT,
  -- Comma-joined UNNESTs behave as inner joins: a Tweet is included only if all five arrays below are non-empty
  UNNEST(entities.annotations.context) AS context,
  UNNEST(entities.annotations.entity) AS entity,
  UNNEST(entities.user_mentions) AS U_MEN,
  UNNEST(entities.hashtags) AS HASH_T,
  UNNEST(user.derived.locations) AS LOC
WHERE created_at > DATETIME_SUB(CURRENT_DATETIME(), INTERVAL @time_interval MINUTE)
GROUP BY
  CTX_ENTITY, CTX_DOMAIN_NAME, NER_TEXT, NER_TYPE, CTX_ID, created_at, TWT_TEXT, TWT_URL, USR_SCR_NM, USR_URL, FOLLOWERS_CT, FRNDS_CT, FAV_CT, STS_CT, USR_MNTS, HASH_TAGS, LANG, CNTRY, TWT_TYP
ORDER BY
  MENTIONS DESC
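At its core, the query counts how often each context entity appears in recent Tweets and ranks entities by that count. Below is a simplified JavaScript sketch of that idea, grouping by entity name only instead of by every selected column. `rankContextEntities` is hypothetical, the `entities.annotations.context[].context_entity_name` shape follows the field names in the query, and the sample rows are illustrative.

```javascript
// Simplified sketch: rank context entities by how often they are annotated.
// Field names follow the query above; the real query groups by many more columns.
function rankContextEntities(tweets) {
  const counts = new Map();
  for (const tweet of tweets) {
    for (const ctx of tweet.entities?.annotations?.context ?? []) {
      const name = ctx.context_entity_name;
      counts.set(name, (counts.get(name) ?? 0) + 1);
    }
  }
  // Equivalent of ORDER BY MENTIONS DESC.
  return [...counts.entries()]
    .map(([entity, mentions]) => ({ entity, mentions }))
    .sort((a, b) => b.mentions - a.mentions);
}

const rows = [
  { entities: { annotations: { context: [{ context_entity_name: "Bitcoin" }] } } },
  {
    entities: {
      annotations: {
        context: [{ context_entity_name: "Bitcoin" }, { context_entity_name: "Solana" }],
      },
    },
  },
  { entities: {} }, // no annotations: contributes nothing
];
console.log(rankContextEntities(rows).map((r) => `${r.entity}:${r.mentions}`));
// [ 'Bitcoin:2', 'Solana:1' ]
```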

SQL to be used for engagement metrics

WITH ENGAGEMENT AS (
  -- Number each Tweet's engagement snapshots from newest to oldest
  SELECT id, favorites, replies, retweets, quote_tweets, video_views,
    ROW_NUMBER() OVER (PARTITION BY id ORDER BY created_at DESC) AS row_num
  FROM `<<DATASET.ENGAGEMENT_TABLE>>`
),
TWEETS AS (
  SELECT id, tweet_url, tweet_type, entities, lang, created_at FROM `<<DATASET.TWEET_TABLE>>`
)
SELECT E.id AS TWT_ID, E.favorites AS FAVS, E.replies AS REPLIES, E.quote_tweets AS QUOTES, E.retweets AS RETWEETS,
  E.video_views AS VIDEO_VIEWS, G.lang AS LANG, G.tweet_url AS TWT_URL, G.created_at AS CREATED_AT, G.tweet_type AS TWT_TYP, G.entities
FROM ENGAGEMENT AS E JOIN TWEETS AS G ON E.id = G.id
WHERE E.row_num = 1 -- keep only the latest snapshot per Tweet
ORDER BY FAVS DESC
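The engagement query keeps only the newest engagement snapshot per Tweet, joins it with the Tweet table, and orders the result by favorites. (The ROW_NUMBER filter suggests the hourly job appends a new row per Tweet on each run; that is an inference, not something this guide states.) The same logic as a JavaScript sketch is useful for checking what the query should return on a few rows. `latestEngagementPerTweet` is hypothetical, only `favorites` is carried over for brevity, and the sample rows are illustrative.

```javascript
// Sketch of the engagement query: latest snapshot per Tweet (row_num = 1),
// inner join with the Tweet rows on id, then sort by favorites descending.
function latestEngagementPerTweet(engagements, tweets) {
  const latest = new Map();
  for (const e of engagements) {
    const current = latest.get(e.id);
    if (!current || new Date(e.created_at) > new Date(current.created_at)) {
      latest.set(e.id, e);
    }
  }
  const tweetsById = new Map(tweets.map((t) => [t.id, t]));
  return [...latest.values()]
    .filter((e) => tweetsById.has(e.id)) // inner JOIN drops unmatched ids
    .map((e) => ({ ...tweetsById.get(e.id), favorites: e.favorites }))
    .sort((a, b) => b.favorites - a.favorites);
}

const engagements = [
  { id: "1", favorites: 10, created_at: "2022-06-01T09:05:00Z" },
  { id: "1", favorites: 25, created_at: "2022-06-01T10:05:00Z" }, // newer snapshot wins
  { id: "2", favorites: 40, created_at: "2022-06-01T10:05:00Z" },
];
const tweets = [
  { id: "1", lang: "en" },
  { id: "2", lang: "en" },
];
console.log(latestEngagementPerTweet(engagements, tweets).map((r) => `${r.id}:${r.favorites}`));
// [ '2:40', '1:25' ]
```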

Step six: Twitter Compliance

It is crucial that any developer who stores Twitter content offline ensures the data reflects user intent and the current state of content on Twitter. For example, when someone on Twitter deletes a Tweet or their account, protects their Tweets, or scrubs the geoinformation from their Tweets, it is critical for both Twitter and our developers to honor that person’s expectations and intent. The batch compliance endpoints provide developers an easy tool to help maintain Twitter data in compliance with the Twitter Developer Agreement and Policy.
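As one concrete piece of this, deleted Tweets must be removed from the data you store. The sketch below assumes you already have the deleted Tweet IDs as a list (for example, extracted from the results of a batch compliance job) and filters them out of stored rows. `applyDeletions` is hypothetical and handles deletions only; protected accounts and scrubbed geo data need their own handling. It compares IDs as strings because Tweet IDs exceed JavaScript's safe integer range. In BigQuery, the equivalent is a DELETE statement filtered on those IDs.

```javascript
// Hypothetical: drop stored Tweets whose IDs were reported as deleted.
// Only deletions are handled here; other compliance events need their own logic.
function applyDeletions(storedTweets, deletedIds) {
  const deleted = new Set(deletedIds.map(String)); // compare IDs as strings
  return storedTweets.filter((tweet) => !deleted.has(String(tweet.id)));
}

const kept = applyDeletions([{ id: "1" }, { id: "2" }, { id: "3" }], ["2"]);
console.log(kept.map((t) => t.id)); // [ '1', '3' ]
```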

Optional: delete the Google Cloud project to avoid further charges

gcloud projects delete <<PROJECT_ID>>

Troubleshooting

Use this forum for support, issues, and questions.