Developer Guide

Twitter API Toolkit for Google Cloud: Recent Search

By Prasanna Selvaraj

Twitter API developers often grapple with processing, analyzing, and visualizing high volumes of Tweets to derive insights from Twitter data. Before they can start validating the value of that data, they have to build data pipelines, select storage solutions, and choose analytics and visualization tools.

The Twitter API toolkit for Google Cloud is a framework for ingesting, processing, and analyzing high volumes of Tweets that developers can install and deploy in less than 30 minutes. The toolkit accelerates time-to-value and enables developers to surface insights from Twitter data easily and quickly. It leverages the recent search endpoint of Twitter API v2, which returns Tweets from the last seven days that match a specific search query.

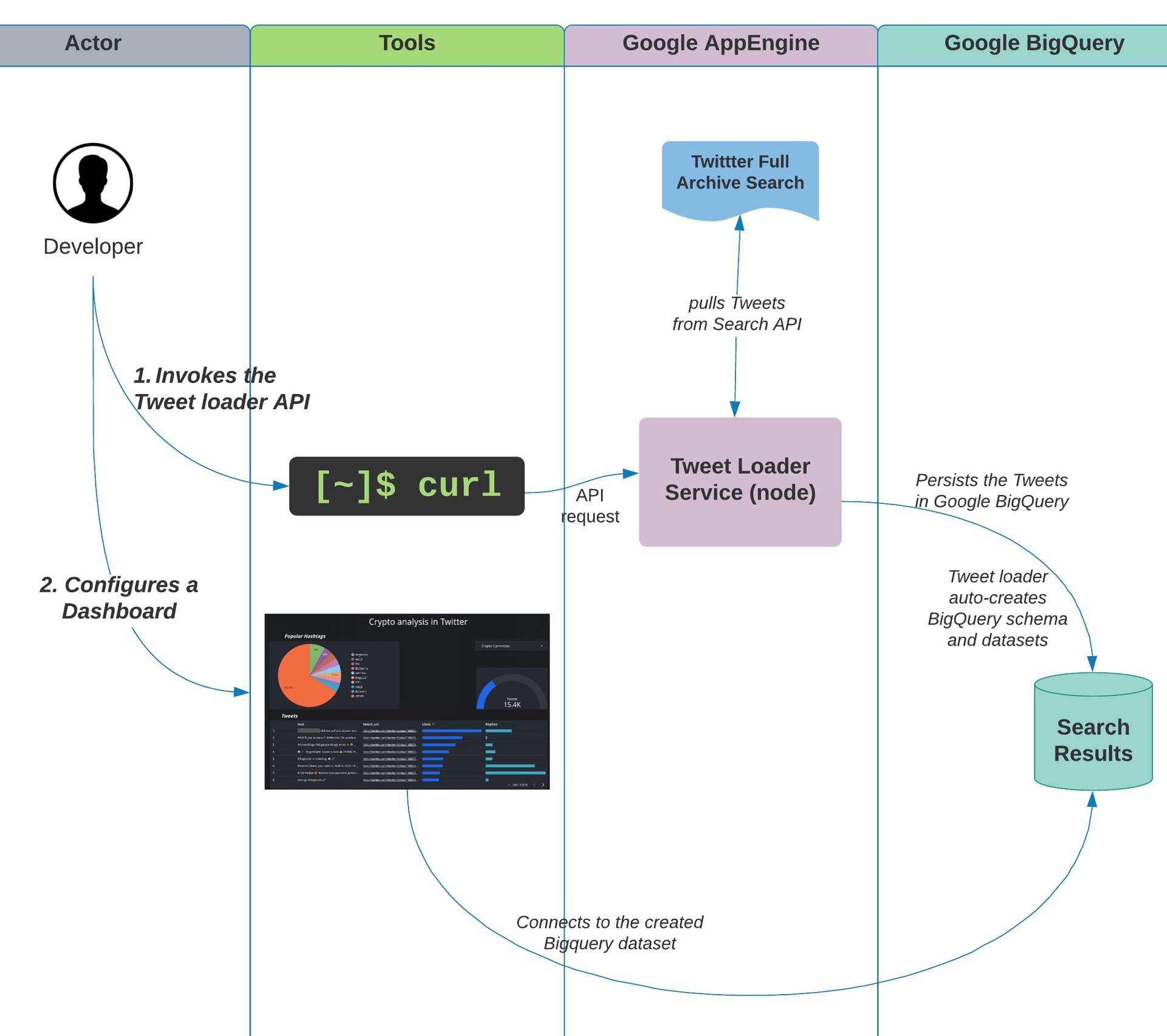

The Twitter API toolkit for Google Cloud leverages BigQuery for Tweet storage, DataStudio for business intelligence and visualizations, and App Engine for the data pipeline.

Why use the Twitter API Toolkit for Google Cloud: Recent Search?

- Process, analyze, and visualize high volumes of Tweets (millions of Tweets, with a design that scales to billions)

- Automate the data pipeline that ingests Tweets into Google Cloud

- Quickly find the Tweets that are impactful for your use case

- Visualize Tweets, and slice and dice them by Tweet metadata

How much time will this take? 30 mins is all you need

If you can spare 30 minutes, please proceed. You will learn the basics of the Twitter API, and as a side benefit, you will also learn about Google Cloud, analytics, and the foundations of data science.

What cloud services will this toolkit leverage, and what are the costs?

- This toolkit requires a Twitter API account, which is free to sign up for with essential access. Essential access allows 500K Tweets per month.

- This toolkit leverages Google BigQuery, App Engine, and DataStudio. For information on pricing, refer to the Google Cloud pricing documentation.

What kind of dashboard can you build with the toolkit?

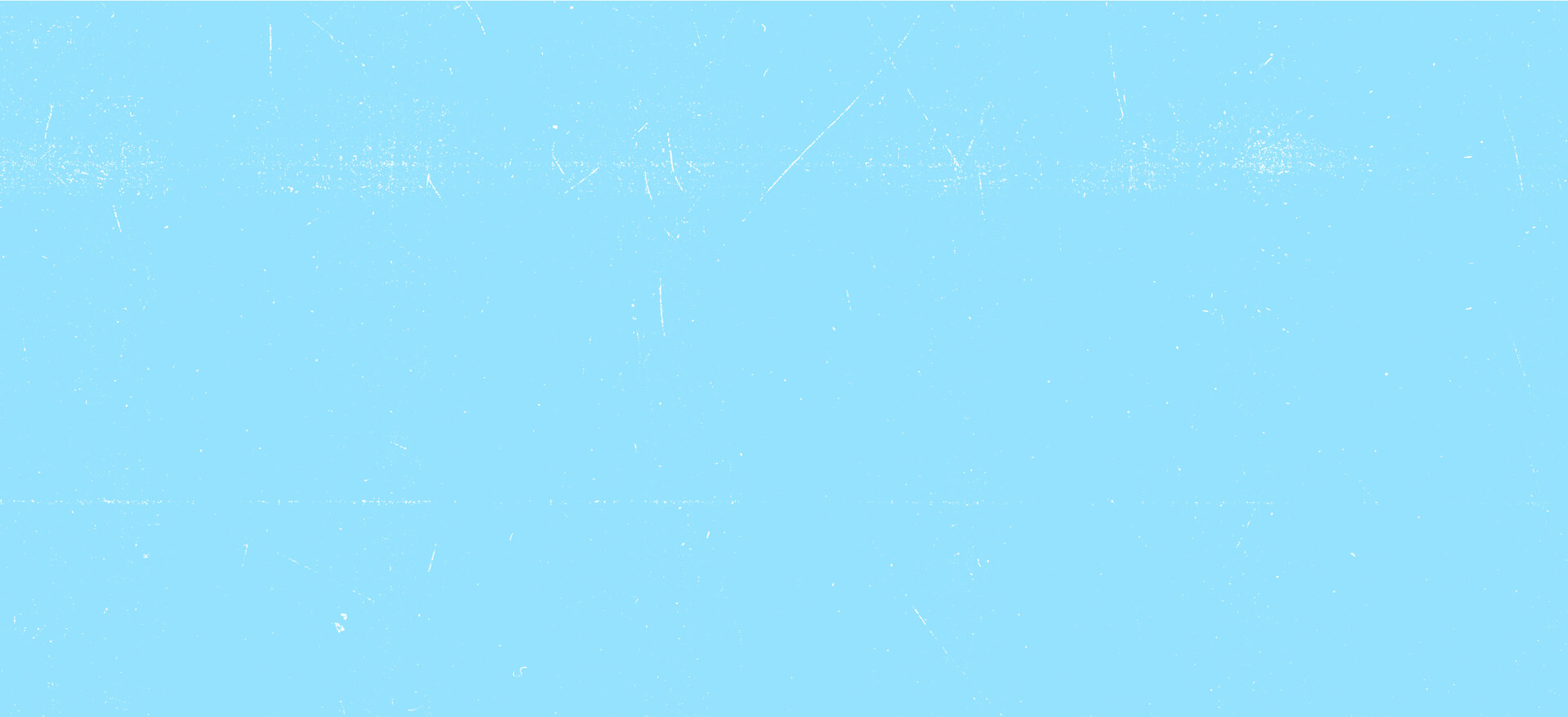

- This toolkit helps you build a dashboard for analyzing the Tweets that the toolkit ingests into Google Cloud.

- The dashboard above is an example built with the toolkit. It illustrates a Tweet dataset about ‘Crypto currencies’.

- The dashboard allows a user to slice and dice the Tweets by popular hashtags and by engagement metrics.

- A user can find the impactful Tweets that matter to their business case by applying the “popular hashtags” filter, or by sorting on the engagement metrics to narrow down to the Tweets with the highest engagement.
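
The same slicing can be approximated outside the dashboard. The following is a minimal Python sketch, assuming each Tweet is a dict shaped like a Twitter API v2 Tweet object with `entities.hashtags` and `public_metrics`. The function names, the sample data, and the simple sum used as an engagement score are illustrative assumptions, not part of the toolkit.

```python
from typing import Any

Tweet = dict[str, Any]

# Public metric fields summed into an illustrative engagement score.
METRIC_KEYS = ("retweet_count", "reply_count", "like_count", "quote_count")


def engagement(tweet: Tweet) -> int:
    """Sum the public engagement counts of a Tweet (illustrative score)."""
    metrics = tweet.get("public_metrics", {})
    return sum(metrics.get(key, 0) for key in METRIC_KEYS)


def has_hashtag(tweet: Tweet, tag: str) -> bool:
    """Return True if the Tweet carries the hashtag (case-insensitive)."""
    hashtags = tweet.get("entities", {}).get("hashtags", [])
    return any(h.get("tag", "").lower() == tag.lower() for h in hashtags)


def top_tweets(tweets: list[Tweet], tag: str, limit: int = 10) -> list[Tweet]:
    """Filter by hashtag, then sort by engagement, highest first."""
    matching = [t for t in tweets if has_hashtag(t, tag)]
    return sorted(matching, key=engagement, reverse=True)[:limit]


# Hypothetical sample data, for illustration only.
sample = [
    {"id": "1", "entities": {"hashtags": [{"tag": "Bitcoin"}]},
     "public_metrics": {"retweet_count": 5, "reply_count": 1,
                        "like_count": 20, "quote_count": 0}},
    {"id": "2", "entities": {"hashtags": [{"tag": "bitcoin"}]},
     "public_metrics": {"retweet_count": 50, "reply_count": 4,
                        "like_count": 90, "quote_count": 3}},
    {"id": "3",
     "public_metrics": {"retweet_count": 500, "reply_count": 0,
                        "like_count": 0, "quote_count": 0}},
]

top = top_tweets(sample, "bitcoin")
print([t["id"] for t in top])
```

Tweet "3" has the highest raw engagement but no matching hashtag, so the filter drops it before the sort is applied.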

Give me the big picture

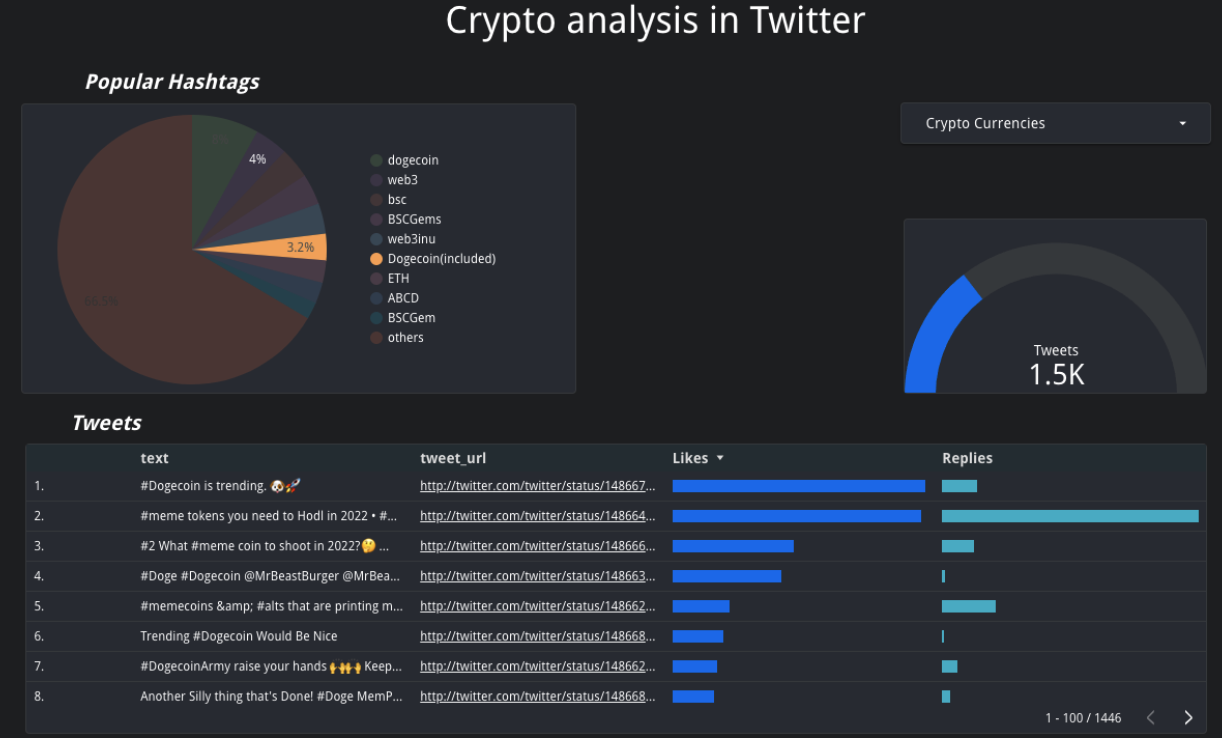

As a user of this toolkit, you need to perform two steps:

1. Install the Tweet loader application in Google Cloud App Engine and invoke it with a curl command, which is the API request that ingests the Tweets

2. Configure a dashboard by connecting DataStudio to the BigQuery database

Prerequisites: As a developer, what do I need to run this toolkit?

- A Twitter Developer account. Sign up here

- A Twitter API bearer token. To learn how to get one, refer to this

- A Google Cloud account. Sign up here

Optional step: If you prefer to load the Tweets manually from a JSON file and then visualize them, follow the video tutorials below. Otherwise, skip to Step One to load the Tweets with the Tweet loader.

1. Manually load the Tweets

2. Visualize the Tweets with DataStudio

How should I use this toolkit? - Tutorial

Step One: Install the Tweet loader application

1. GitHub repo: https://github.com/twitterdev/gcloud-toolkit-recent-search

2. Open the Google Cloud console and launch “Cloud Shell”. Ensure you are in the right Google Cloud project

3. At the command prompt, download the code for the toolkit by executing the command:

git clone https://github.com/twitterdev/gcloud-toolkit-recent-search

4. Navigate to the source code folder:

cd gcloud-toolkit-recent-search/

5. Edit the configuration file with your favorite editor, such as vi or emacs:

vi config.js

Edit line #3 in config.js by inserting the Twitter API bearer token. Ensure that the word ‘Bearer’ precedes the token, separated by a space.

Edit line #4 in config.js by inserting the Google Cloud project ID.

6. Set the Google Cloud project ID:

gcloud config set project <<PROJECT_ID>>

7. Deploy the code to App Engine by executing the command below:

gcloud app deploy

- Authorize the command

- Choose a region for deployment (for example, 18 for us-east1)

- Accept the default config with Y

8. After the deployment, get the URL endpoint for the deployed application with the command:

gcloud app browse

9. Enable the BigQuery API:

gcloud services enable bigquery.googleapis.com
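
The ‘Bearer’ prefix required in config.js is easy to get wrong, for example by omitting it or pasting it twice. The following is a minimal Python sketch of normalizing a token before pasting it into the configuration file. The helper name `as_bearer_value` is hypothetical and not part of the toolkit, and the sketch assumes the token itself contains no whitespace.

```python
def as_bearer_value(raw: str) -> str:
    """Return the token in the 'Bearer <token>' form described above.

    Accepts the token with or without an existing 'Bearer' prefix and
    rejects empty input or input with more than one token.
    """
    parts = raw.split()
    # Drop an existing prefix so it is never doubled.
    if parts and parts[0].lower() == "bearer":
        parts = parts[1:]
    if len(parts) != 1:
        raise ValueError(
            "expected exactly one token, optionally prefixed with 'Bearer'"
        )
    return f"Bearer {parts[0]}"


# Placeholder token, for illustration only.
print(as_bearer_value("  bearer EXAMPLE_TOKEN "))
```

The check fails loudly on an empty value, which is cheaper to catch here than after a deployment that cannot authenticate.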

Step Two: Load the Tweets with the curl command

1. Get the URL endpoint of the deployed Tweet loader application by executing the command below in Cloud Shell:

gcloud app browse

2. Execute the curl command below, using the URL from step 1 with the path “/search” appended

You might need to change the dates so that they fall within the last 7 days

curl -d '{
  "recentSearch" : {
    "query" : "Apple AirTag",
    "maxResults" : 100,
    "startTime" : "2022-10-24T17:00:00.00Z",
    "endTime" : "2022-10-26T17:00:00.00Z",
    "category" : "Tracking Devices",
    "subCategory" : "Wireless Gadgets"
  },
  "dataSet" : {
    "newDataSet" : true,
    "dataSetName" : "Gadgets"
  }
}' -H 'Content-Type: application/json' https://<<Tweet loader URL>>.appspot.com/search

3. Tweet loader parameters

- "recentSearch/query" : A query that complies with the Twitter recent search query syntax.

- "recentSearch/maxResults" : The maximum number of Tweets per API call; the limit is 100. If the search query matches more than 100 Tweets, the Tweet loader automatically paginates the API result set and persists the Tweets. If the search matches more than 500K Tweets, you will get rate limiting errors. With Twitter API elevated access, more than 500K Tweets can be persisted.

- "recentSearch/startTime" and "recentSearch/endTime" : Timestamps in ISO 8601/RFC 3339 format, YYYY-MM-DDTHH:mm:ssZ. The startTime must fall within the last 7 days and must not be later than the endTime.

- "recentSearch/category" and "recentSearch/subCategory" : Discriminators that tag the Tweet loader queries with a unique name. These tags can be used in reporting to filter data by Tweet loader query.

- "dataSet/newDataSet" : When set to "true", a new dataset is created in BigQuery. To append Tweet loader results to an existing dataset, set this to "false".

- "dataSet/dataSetName" : A unique name for the dataset, for example "Games_2021".
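
Because the time window must stay within the last 7 days, hard-coded dates go stale quickly. The following is a minimal Python sketch that builds the request body from the parameters described above and checks the stated constraints before anything is sent. The function names are hypothetical, the lower bound of 1 for maxResults is an assumption, and the sketch only prints the JSON rather than calling the Tweet loader.

```python
import json
from datetime import datetime, timedelta, timezone

MAX_RESULTS_LIMIT = 100              # per-call ceiling described above
SEARCH_WINDOW = timedelta(days=7)    # recent search covers the last 7 days


def to_iso(ts: datetime) -> str:
    """Format a timezone-aware datetime as YYYY-MM-DDTHH:mm:ssZ (UTC)."""
    return ts.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def build_payload(query, start, end, dataset_name, *, new_dataset=True,
                  max_results=MAX_RESULTS_LIMIT, category="",
                  sub_category="", now=None):
    """Validate the parameters and return the request body as a dict.

    start, end, and now must be timezone-aware datetimes.
    """
    now = now or datetime.now(timezone.utc)
    # Lower bound of 1 is an assumption; the guide only states the maximum.
    if not 1 <= max_results <= MAX_RESULTS_LIMIT:
        raise ValueError("maxResults must be between 1 and 100")
    if start > end:
        raise ValueError("startTime must not be later than endTime")
    if start < now - SEARCH_WINDOW:
        raise ValueError("startTime must be within the last 7 days")
    return {
        "recentSearch": {
            "query": query,
            "maxResults": max_results,
            "startTime": to_iso(start),
            "endTime": to_iso(end),
            "category": category,
            "subCategory": sub_category,
        },
        "dataSet": {"newDataSet": new_dataset, "dataSetName": dataset_name},
    }


# Example: search the two days ending one hour ago.
current = datetime.now(timezone.utc)
payload = build_payload(
    "Apple AirTag",
    start=current - timedelta(days=2),
    end=current - timedelta(hours=1),
    dataset_name="Gadgets",
    category="Tracking Devices",
    sub_category="Wireless Gadgets",
)
print(json.dumps(payload, indent=2))
```

The printed JSON could be passed to `curl -d` in place of a hand-written body, so the dates are always computed relative to the time of the request.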

Step Three: Visualize the Tweets in Google DataStudio

Step Four: Twitter Compliance

It is crucial that any developer who stores Twitter content offline ensures the data reflects user intent and the current state of content on Twitter. For example, when someone on Twitter deletes a Tweet or their account, protects their Tweets, or scrubs the geoinformation from their Tweets, it is critical for both Twitter and our developers to honor that person’s expectations and intent. The batch compliance endpoints provide developers an easy tool to help maintain Twitter data in compliance with the Twitter Developer Agreement and Policy.
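
As one illustration of acting on compliance results, the sketch below removes stored Tweets that a compliance job has flagged for deletion. It assumes the results arrive as JSON Lines, one object per line with an `id` and an `action` field. That format, the sample records, and the function names are assumptions made for illustration, so check the batch compliance documentation before relying on them.

```python
import json
from typing import Iterable


def ids_to_delete(result_lines: Iterable[str]) -> set[str]:
    """Collect Tweet IDs whose compliance record asks for deletion."""
    ids = set()
    for line in result_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        # Assumed record shape: {"id": "...", "action": "delete", ...}
        if record.get("action") == "delete":
            ids.add(record["id"])
    return ids


def apply_compliance(stored: list[dict], result_lines: Iterable[str]) -> list[dict]:
    """Return the stored Tweets minus those flagged for deletion."""
    flagged = ids_to_delete(result_lines)
    return [tweet for tweet in stored if tweet["id"] not in flagged]


# Hypothetical stored Tweets and compliance results, for illustration only.
stored_tweets = [{"id": "101", "text": "a"}, {"id": "102", "text": "b"}]
results = [
    '{"id": "102", "action": "delete", "reason": "deleted"}',
    "",
]

remaining = apply_compliance(stored_tweets, results)
print([t["id"] for t in remaining])
```

In this toolkit the Tweets live in BigQuery, so the equivalent step would be a delete against the dataset rather than an in-memory filter; the sketch only shows the matching logic.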

Optional: Delete the Google Cloud project to avoid any overage costs

gcloud projects delete <<PROJECT_ID>>

Troubleshooting

Use this forum for support, issues, and questions.