Product News

Prototyping in production for rapid user feedback

By Daniele 3 December 2020


We’re on a journey to rebuild the Twitter API from the ground up. Over the years, our team has released many endpoints on our platform. We’ve identified about a hundred endpoints to replace with updated versions, and we want to release them as fast as possible. We need our engineers to focus on shipping the new API, but we also want to work closely with developers to see what they think of our intended design before we launch. How can we include user feedback while aiming for a fast-paced release schedule?

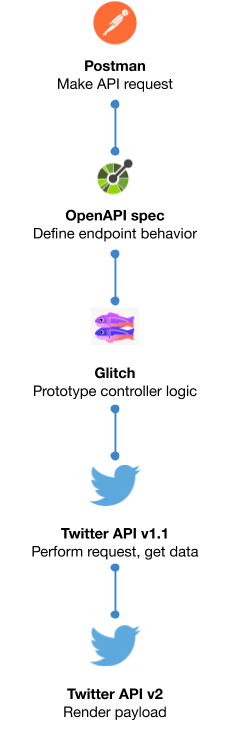

Instead of simply telling developers what we have in mind, we agreed that we wanted to show them a tangible prototype of what an existing v1.1 endpoint would look and feel like in v2. We started to look into prototyping options that didn’t require large internal coordination and could be spun up and wound down easily. Eventually, we aligned on an uncommon (for us) workflow:

- Design an OpenAPI spec with the v2 functionality to prototype

- Use the public v1.1 functionality as a “backend”

- Create a route controller and serve it using Glitch

- Have the route controller render the response using the new Twitter API response format

- Make requests using a dedicated Postman collection

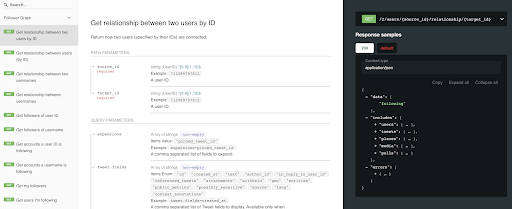

Since we use OpenAPI to define how the endpoint will behave, we were able to turn the specification into human-readable documentation at no additional development cost. We used ReDoc to render our prototype API reference:

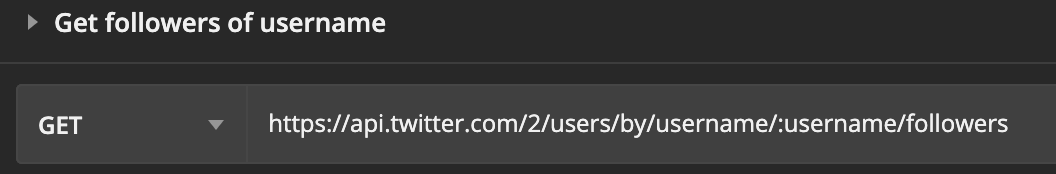

Having defined that, we created a Postman collection that contained the same routes. During user research, we wanted developers to feel and notice every aspect of the API, including its URL and request method. In Postman, they saw URLs like this one:

Except this URL did not exist and would have resolved to a 404. How did we make it work?

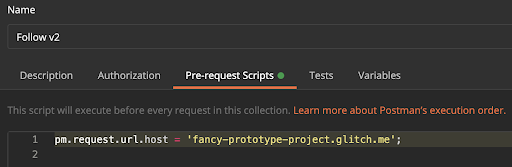

Behind the scenes, we used Postman’s pre-request scripts to swap api.twitter.com with the hostname of the Glitch project that we used for testing.
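The host swap can be illustrated with a small, dependency-free function. This is only a sketch of the idea, not the script we shipped: the function name `swapHost` and the hostname `my-v2-prototype.glitch.me` are made up for illustration, and in Postman the same logic would act on the outgoing request (`pm.request.url`) rather than on a plain string.

```javascript
// Hypothetical hostname; the real Glitch project name is not given here.
const PROTOTYPE_HOST = "my-v2-prototype.glitch.me";

// Rewrite a request URL so it targets the prototype host, keeping the
// path and query string exactly as the developer typed them.
function swapHost(requestUrl, prototypeHost = PROTOTYPE_HOST) {
  const url = new URL(requestUrl);
  if (url.hostname === "api.twitter.com") {
    url.hostname = prototypeHost;
  }
  return url.toString();
}

// The developer sees api.twitter.com; the request goes to the prototype.
console.log(
  swapHost("https://api.twitter.com/2/users/by/username/i_am_daniele/following")
);
```

URLs that do not point at api.twitter.com pass through unchanged, so the same collection could still call real endpoints.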

On Glitch, we hosted the logic for routing, validating, and serving the request. We used a Node backend with Express. That allowed us to attach common validation functionality and authenticate every request through middleware that gets triggered before serving the request. Since we designed the prototype functionality through an OpenAPI contract, we did not have to create custom logic for validating the request. Instead, we used express-openapi-validator to apply validation before hitting the controller; this package also provides a developer experience very similar to the validation and error messages we present in production.
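To make the middleware idea concrete, here is a minimal sketch of an Express-style authentication step. The function name, the bearer-token check, and the error shape are all assumptions for illustration; the actual authentication logic is not shown in this post. In an Express app, a function like this would be registered with `app.use(...)` ahead of the validator and the controllers.

```javascript
// Express-style middleware: runs before the controller and rejects
// requests that carry no bearer token. The check is a placeholder and
// does not verify the token against anything.
function requireBearerToken(req, res, next) {
  const header = req.headers["authorization"] || "";
  const match = /^Bearer\s+(\S+)$/i.exec(header);
  if (!match) {
    // Hypothetical error body; the production format may differ.
    res.status(401).json({ title: "Unauthorized", detail: "Missing bearer token." });
    return;
  }
  req.token = match[1]; // made available to later handlers
  next();
}
```

Because the function only depends on the `(req, res, next)` contract, it can be exercised with plain objects before it is attached to a server.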

The controller itself is actually straightforward: it maps a request to a v1.1 endpoint, and renders the results by using a v2 lookup endpoint.

Here’s the flow for each prototype endpoint:

- We define the prototype route. For example, to get the users I’m following, /2/users/by/username/:username/following

- We define the related v1.1 endpoint; in this case /1.1/friends/ids.json

- When the controller receives a valid request, it translates the :username path parameter into v1.1 query parameters. In other words, /2/users/by/username/i_am_daniele becomes /1.1/friends/ids.json?screen_name=i_am_daniele.

- It determines if the v1.1 request succeeded; if not, the controller fails early with an error message.

- If the request succeeded, the list of IDs is passed into a v2 lookup endpoint, in this case /2/users.

- It returns the v2 response verbatim to the user.
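The steps above can be sketched as a single controller function. This is a simplified illustration under stated assumptions rather than our prototype code: `getFollowing` and the injected `fetchJson` helper are hypothetical names, `fetchJson` is assumed to resolve to `{ ok, status, body }`, and the error body is made up. Pagination and empty results are left out.

```javascript
const V1_BASE = "https://api.twitter.com/1.1";
const V2_BASE = "https://api.twitter.com/2";

// fetchJson is injected (hypothetical helper) and is assumed to resolve
// to { ok: boolean, status: number, body: object }.
async function getFollowing(username, fetchJson) {
  // Translate the :username path parameter into v1.1 query parameters.
  const v1Url = `${V1_BASE}/friends/ids.json?screen_name=${encodeURIComponent(username)}`;
  const v1 = await fetchJson(v1Url);

  // Fail early if the v1.1 request did not succeed.
  if (!v1.ok) {
    return {
      status: v1.status,
      body: { title: "Upstream error", detail: "The v1.1 request failed." },
    };
  }

  // Pass the list of IDs to the v2 lookup endpoint
  // (capped at 100 IDs per lookup request in this sketch).
  const ids = v1.body.ids.slice(0, 100).join(",");
  const v2 = await fetchJson(`${V2_BASE}/users?ids=${ids}`);

  // Return the v2 response verbatim.
  return { status: v2.status, body: v2.body };
}
```

Injecting `fetchJson` keeps the mapping logic separate from HTTP and authentication details, which also makes the flow easy to test with a stub.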

We wanted to replicate the v2 developer experience as closely as possible, so we finessed the prototype by adding support for fields, expansions, metrics, and annotations. This came at the cost of making two requests per operation, an acceptable compromise since the prototype is designed for low usage.
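One way to support fields and expansions is to forward the relevant query parameters from the prototype request to the v2 lookup. The sketch below assumes that approach; the function name `buildLookupQuery` and the exact list of forwarded parameters are illustrative choices, not a description of how the prototype was written.

```javascript
// Query parameters forwarded to the v2 lookup; this list is an assumption.
const FORWARDED_PARAMS = ["user.fields", "tweet.fields", "expansions"];

// Build the query string for the v2 lookup from the IDs returned by v1.1
// and the query parameters on the incoming prototype request.
function buildLookupQuery(ids, incomingQuery) {
  const params = new URLSearchParams({ ids: ids.join(",") });
  for (const name of FORWARDED_PARAMS) {
    if (incomingQuery[name]) {
      params.set(name, incomingQuery[name]);
    }
  }
  return params.toString();
}
```

Parameters outside the list are dropped, so the lookup request only carries options the v2 endpoint is expected to understand.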

As we build the new Twitter API, we have found these prototypes to be a valuable tool for giving the world a tangible understanding of our intended design. They help developers focus on providing meaningful input on the features they want to see in the new API, and they help us iterate at a faster pace than ever before.